Please note that this blog is archived and outdated.

Dealing with Risk When Using Agile to Develop Software

Risk is everywhere, and no matter how hard you try, it is impossible to escape. To deal with the risk that inevitably comes with software development, we must find ways to identify it, mitigate it and allocate time to it accurately. This enables a team to keep to project timelines rather than have them blow out. This article outlines how we approach risk throughout the various stages of agile development and the steps we take to de-risk our projects. There is risk under every stone in the software development process, so we will only touch on a few of the places where it is found and how best to deal with it.

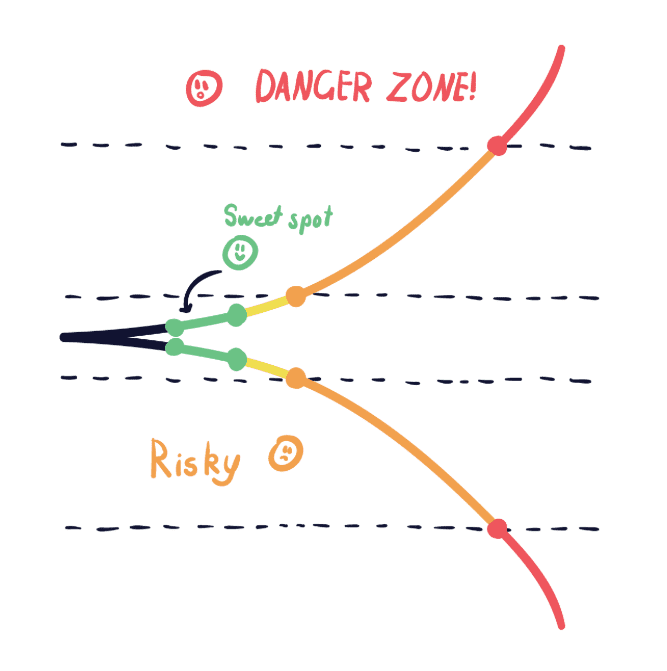

The Cone of Uncertainty

The beginning of any software development project is by far the riskiest time for all involved if it is not approached from the right angle. The Cone of Uncertainty (available below) depicts how the further we look into the future, the more we are uncertain about. Many of the factors that may impact a project in six months' time are simply unknown to us today. For example, Apple or Google may update their software requirements, or a plugin we were planning to use may reach end of life.

This is why it's important to break down the project into smaller builds. Each build can be scoped at the relevant time allowing greater certainty around the estimated length. This is the premise of agile software development but it helps to understand the 'why.'

Our x-axis on the Cone of Uncertainty is the time in the future we are trying to predict. The further out we look from where we are now, the more risk is associated with the prediction. Our aim is to estimate out to our “sweet spot”, which we find is about 8 weeks into the future. This enables us to be confident in the estimations we make without taking on too much risk.

Using the cone of uncertainty in your projects

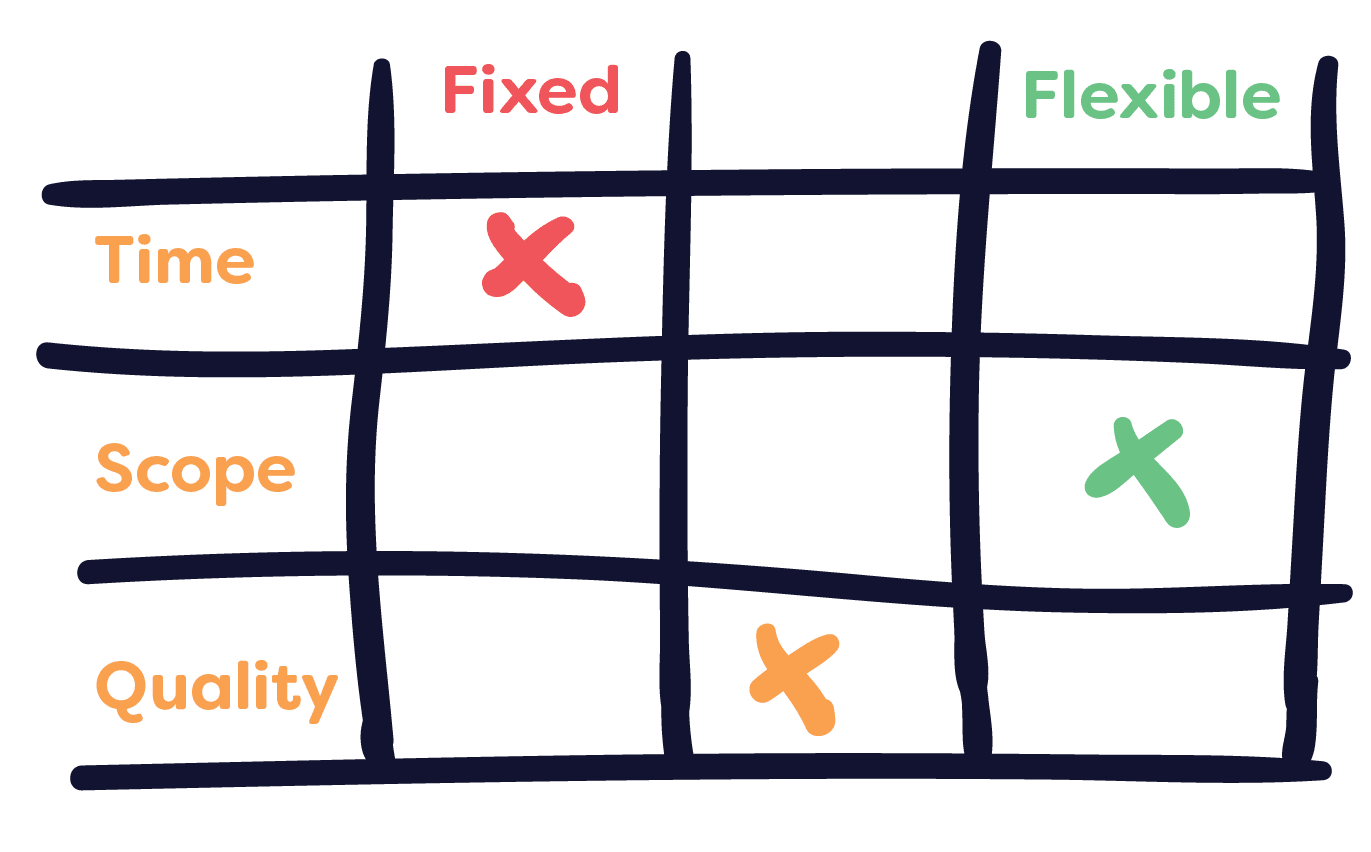

The Cone of Uncertainty isn't just a visual depiction of how risk is measured in software development. It is also a useful tool for explaining the impact of different software development approaches: agile (fixed time or fixed scope) versus waterfall.

The Cone of Uncertainty is a great way to reinforce why agile is such a beneficial approach to software development. By working towards a milestone in shorter iteration cycles, with scoping sessions to map out the next development cycle, we keep our estimations in that sweet spot throughout the life of the project rather than estimating too far into the future.

We utilised this very approach with one of our clients. We were able to bring the Cone of Uncertainty into the conversation early on to show them the benefits of using an agile approach over fixed time and materials (waterfall). This shift in perspective, away from having everything delivered at once and towards working iteratively, is often the most difficult one to convey to clients. The Cone of Uncertainty makes the discussion more visual and makes the benefits of agile development easier to digest by framing them in terms of risk. Understanding the Cone of Uncertainty and how it applies to a project also benefits the client. When we can mitigate risk throughout the brief and scoping process, it generally reduces the estimation, which brings the overall cost of a project down.

Risk rating and tech spikes

The tech spike allowance creates a bank of time that the project team can draw upon when it needs to. A tech spike gives the team time to research or test concepts before estimating an issue properly. Typically, a project team member indicates the need for one. However, every task with a risk rating higher than 8 must be de-risked before it is estimated, and that risk is almost always reduced by completing a tech spike.

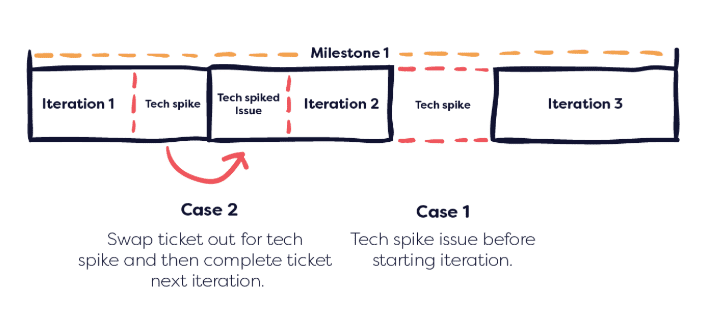

A tech spike can be included in the development process in two ways. Case 1: if the issue being estimated has a high priority and cannot be moved into another iteration, a tech spike for the issue can be slotted in before the next iteration as a chance to research before estimating. Case 2: the issue can be moved into a later iteration, and a tech spike can be put into the next iteration in its place (this option doesn't interrupt the development flow).

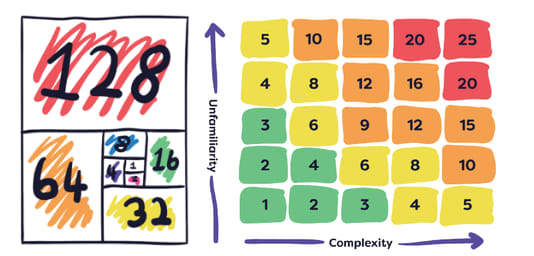
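The two ways of slotting in a tech spike can be sketched in a few lines of Python. This is only an illustration under assumptions of our own: the function name `schedule_with_spike`, the representation of iterations as lists of issue names, and the choice to move a deferred issue into the iteration immediately after the next one are all hypothetical and not part of any tool we use.

```python
def schedule_with_spike(iterations, issue, can_defer):
    """Return a new plan with a tech spike placed for `issue`.

    Assumptions (illustrative only): `iterations` is a non-empty list of
    lists of issue names, iterations[0] is the next iteration, and `issue`
    is currently planned in iterations[0].
    """
    spike = f"Tech spike: {issue}"
    plan = [list(items) for items in iterations]  # copy, leave the input untouched

    if can_defer:
        # Case 2: the spike takes the issue's place in the next iteration
        # and the issue itself moves to a later iteration.
        slot = plan[0].index(issue)
        plan[0][slot] = spike
        if len(plan) < 2:
            plan.append([])
        plan[1].append(issue)
    else:
        # Case 1: the issue cannot move, so the spike runs on its own
        # before the next iteration starts.
        plan.insert(0, [spike])
    return plan


if __name__ == "__main__":
    upcoming = [["A", "B"], ["C"]]
    print(schedule_with_spike(upcoming, "B", can_defer=False))
    print(schedule_with_spike(upcoming, "B", can_defer=True))
```

In the deferrable case the number of iterations stays the same, which is why that option doesn't interrupt the development flow.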

Our risk rating, and the score it produces, is what initiates a tech spike. We rate how unfamiliar and how complex the issue is to the team and combine the two into a risk multiplier, which gives us a risk score out of 25. As mentioned above, if the score is above 8, a tech spike is required to de-risk the task before it goes into development. We then use a Fibonacci-like sequence to gauge the general size of the task, and multiply that size by the risk score to get the time estimate for the task.
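As a rough sketch of the arithmetic, the snippet below assumes that unfamiliarity and complexity are each rated from 1 to 5 and multiplied together, which is one way to arrive at a score out of 25. The 1 to 5 scales, the example size values and the names `Issue`, `SIZES` and `SPIKE_THRESHOLD` are assumptions made for illustration; only the threshold of 8 and the "size multiplied by risk score" step come from the description above, and the unit of the resulting estimate is left unspecified.

```python
from dataclasses import dataclass

SPIKE_THRESHOLD = 8           # scores above this require a tech spike
SIZES = (1, 2, 3, 5, 8, 13)   # assumed example of a Fibonacci-like size scale


@dataclass
class Issue:
    name: str
    unfamiliarity: int  # assumed scale: 1 (well known) to 5 (never done before)
    complexity: int     # assumed scale: 1 (trivial) to 5 (very complex)
    size: int           # one of SIZES

    def risk_score(self) -> int:
        """Risk score out of 25, assuming the two ratings are multiplied."""
        for rating in (self.unfamiliarity, self.complexity):
            if not 1 <= rating <= 5:
                raise ValueError("ratings must be between 1 and 5")
        return self.unfamiliarity * self.complexity

    def needs_tech_spike(self) -> bool:
        return self.risk_score() > SPIKE_THRESHOLD

    def estimate(self) -> int:
        """Size multiplied by risk score; refuses to estimate risky issues."""
        if self.size not in SIZES:
            raise ValueError(f"size must be one of {SIZES}")
        if self.needs_tech_spike():
            raise ValueError("de-risk with a tech spike before estimating")
        return self.size * self.risk_score()


if __name__ == "__main__":
    familiar = Issue("Login form", unfamiliarity=2, complexity=3, size=5)
    print(familiar.risk_score(), familiar.needs_tech_spike(), familiar.estimate())

    risky = Issue("New payment gateway", unfamiliarity=3, complexity=3, size=5)
    print(risky.risk_score(), risky.needs_tech_spike())
```

Here the first issue scores 6 and can be estimated straight away, while the second scores 9 and would need a tech spike to bring its ratings down before an estimate is given.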

Using risk rating and tech spikes

Risk rating and tech spikes are used extensively during the internal development of Codebots. Our team working on Springbot made great use of tech spikes and risk ratings when adding relationships to the bot. They found them useful when estimating features whose details or technologies they were unsure of. A risk rating can show whether a tech spike is required or whether more research and time should simply be allocated. In the case of our relationship implementation, the idea wasn't completely foreign to the team, so when it came to assigning a risk they found that, although the risk score was just below 8, extra time needed to be allocated to ensure any unknowns could be handled within the expected delivery timeframe. This ended up being extremely valuable to the team, as issues cropped up throughout the implementation. If it weren't for the appropriate rating of risk and allocation of time, deadlines would have been missed.