Securely Building AI That Builds AI

Artificial intelligence, at any level of understanding, is intellectually interesting, but mostly philosophically (and at times, ethically) uncertain.

I have a psychology background, and when I was researching this topic, all I could think about was the parallel I could draw between the human brain and an artificial neural network (then I discovered that this is because neural networks are modelled on the brain!).

In psychology, the brain is often referred to as a “black box” – meaning we have no clue what goes on in there, in an absolute manner. Sure, we can run fMRIs and EEGs on the brain itself, but at the end of the day, we are inferring based on years of research and studies.

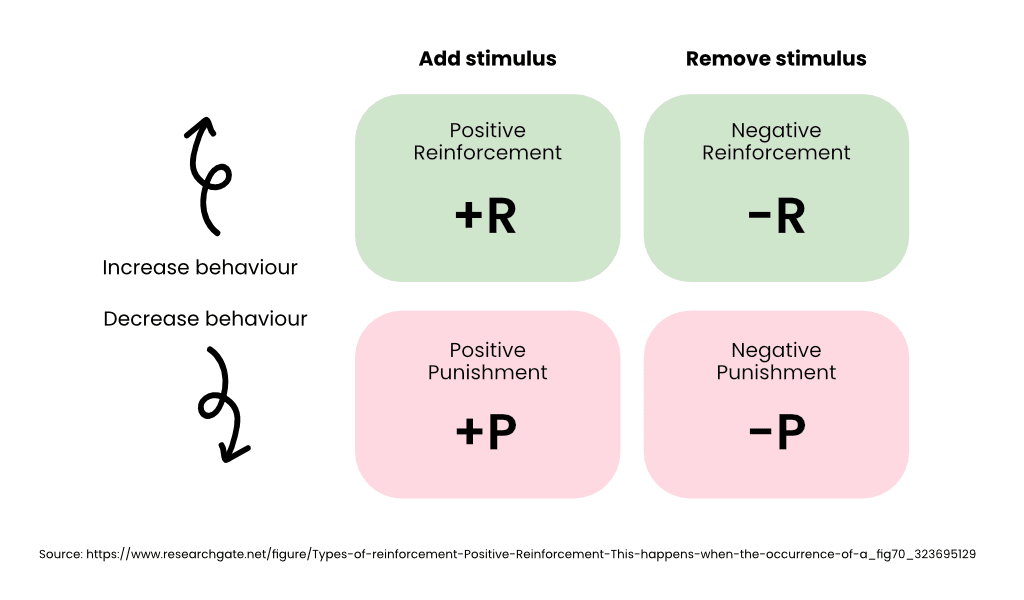

Progressing this metaphor, if we think about behaviour and how we as humans learn, at a very basic level, we refer to Pavlov’s dogs in classical conditioning or the concept of positive reinforcement and negative punishment in operant conditioning.

When we as humans learn new behaviours, the above framework influences our decisions to carry out those behaviours until we no longer have to think about them (to a certain extent, of course). Take driving a car. When we were teenagers with our learner’s permit, driving was an intense learning experience. After progressing through the licence levels, though, it becomes something we might call “second nature”.

What progresses this intense experience to second nature?

It is in the push and pull of the consequences our behaviour produces. In the learning to drive example, a positive reinforcement might be passing the driving test to be able to drive unsupervised. On the flip side, a negative punishment might be the loss of that licence due to unlawful driving behaviour.

Cognitively speaking, we don’t really know what happens in the brain to encode the good and the bad and so shape our behaviour, but we do know that it happens through this kind of operant conditioning.

I am sure you have come across this concept on numerous occasions, so I won’t delve any further into examples. The important thing here is to draw the similarity between what we input into systems (organic or man-made) and what we get out of them, without really knowing what happens in the “in-between”.

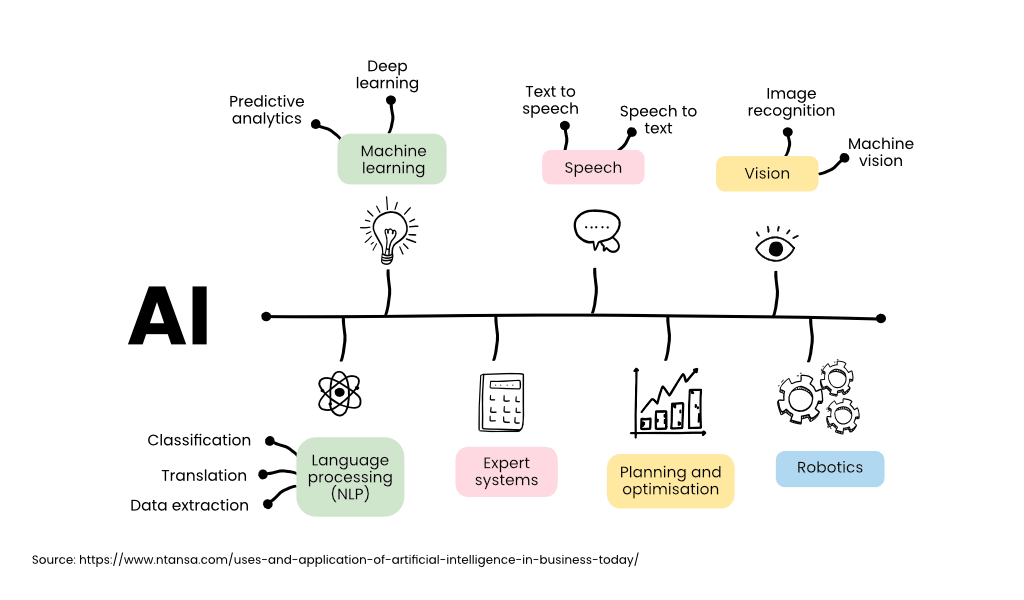

Artificial intelligence (AI) is when computers or robots are programmed to perform tasks that normally need human intelligence, like learning or solving problems (Britannica, 2024). However, given what I presented at the beginning of this article, this overarching definition might be too broad, as it doesn’t touch on the complexity involved with networks.

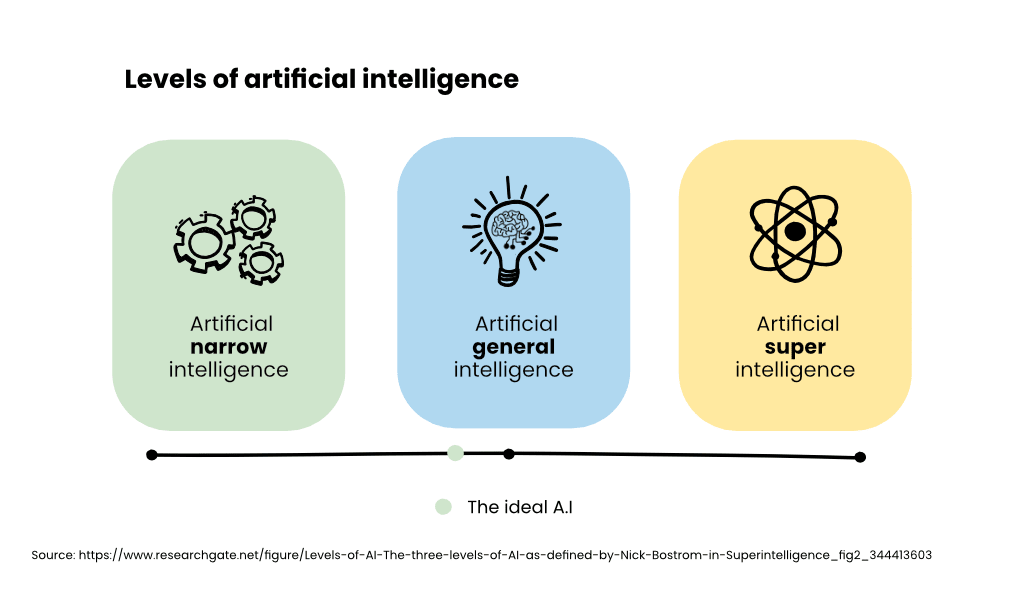

Re-introducing the concept of neural networks and how it relates to AI, we need to consider that AI is not a singular school of thought; there are multiple levels to acknowledge.

The 3 levels of AI, as depicted by Codebots, are:

- Artificial Narrow Intelligence

- Artificial General Intelligence

- Artificial Super Intelligence

Deep learning sits in level 2 (hey look a brain image – can’t think why that might be!). Deep learning is actually developed with the brain in mind (which is why I chose a psychological metaphor for this article).

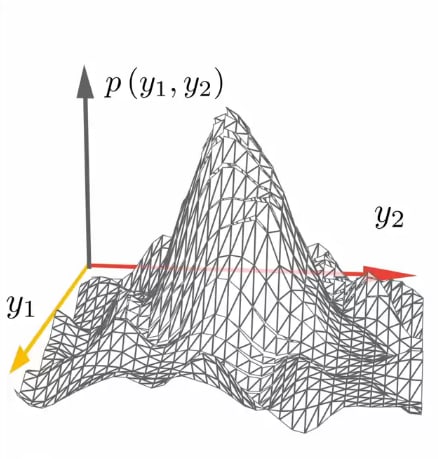

If it is not graphically represented in an organic way, another way its complexity can be demonstrated is through a network of data points.

Looking at this graph, we can see a lot of fluctuations and patterns, all within a set of parameters. For any math geek or stats enthusiast out there, this doesn’t look too hectic, in the sense that it is contained and somewhat predictable. That predictability, though, comes from the parameters being set and the outputs being idealised.

It is interesting that, with this ‘artificial’ intelligence, we still want (and need) to be in control of the outputs.

Deep learning is still in a relatively primitive state: it is quite immature and constantly learning. The (ideal) optimal state of operation would be for neural networks to produce results as efficiently as the human brain. Sure, we have computers and networks that are able to produce results in milliseconds. Google’s search engine is a perfect example of this. What a neural network does on top of that is continue to learn from every input, search process and resulting output, to start building a system that operates within itself.

Operates within itself…

When I was reading more and more about deep learning and neural networks, I was struggling to come to grips with the ethical issues, or governance issues, that could arise with this level of artificial intelligence.

Red flags everywhere!

Essentially, we are creating the foundations for a system to build its own bigger system and we are launching this into the wild.

My red flags:

Bias

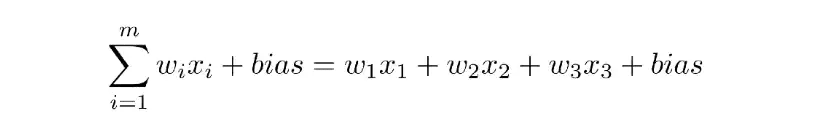

This is the equation that neural networks operate on. Notice the bias term? In the equation itself, the bias is a learned numerical offset rather than a prejudice. The bias I am worried about (gender, racial, intellectual, etc.) enters through the data a network learns from. My issue is this: if artificial intelligence is, ideally, meant to remove the human factor (which, more often than not, is where this bias comes from), why are we feeding that bias back in?
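To make the equation more concrete, here is a minimal sketch of a single artificial neuron. It assumes the commonly used form (a weighted sum of the inputs plus a bias term, passed through an activation function), which may differ in detail from the image above. The sigmoid activation, the name `neuron_output`, and the numbers are illustrative assumptions. In this sketch the bias is simply a number that shifts the neuron's output up or down.

```python
import math


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias offset, squashed by a sigmoid."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-weighted_sum))


# Hypothetical example values, chosen only for illustration.
inputs = [1.0, 0.5]
weights = [0.4, -0.2]

without_bias = neuron_output(inputs, weights, bias=0.0)
with_bias = neuron_output(inputs, weights, bias=1.0)

print(without_bias, with_bias)
```

With the same inputs and weights, raising the bias raises the output, which is all the bias term does here.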

Development of efficiencies

Earlier I mentioned that in learning behaviours we, as humans, develop efficiencies. One of the potential dilemmas that might arise from this is that neural networks may (or will) learn new ways of performing tasks. This might sound like a natural progression, and in some cases, it will be the very reason we use systems such as this.

The counterargument applies when a specific method for achieving an output is in place for good reasons. One of these reasons could be as blatant as legal restrictions. Sure, there are parameters we can input into these systems that restrict the network to producing an output that is legal (in this case). The issue arises when the neural network works out how to get around these parameters to produce the output.

This is quite a philosophical argument and there are many opinion pieces available to the curious public. Fair warning though, it starts to sound like a chicken and egg situation very quickly!!

I like to think about it with an example – and because we have used the car example previously, I will stay on that avenue.

When you get in the car, you pop your seat belt on and then start driving. Whether you are a seat belt on, then ignition kind of person, or the other way round, securing the seat belt before actively driving the car is the process we follow as safe individuals.

So, what if you combined those actions: seat belt on whilst driving? Sure, it would be more efficient, but nothing protects you if another car collides with yours before your seat belt is on.

This is a case where the intended method should not be worked around.
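One way to picture the workaround problem is a toy sketch, under heavy assumptions, of a system that simply picks the fastest plan. It is not how any real neural network is built; the `Plan` class, the two plans, the timings and the penalty value are all hypothetical. It contrasts a soft rule (the unsafe plan is penalised but still allowed) with a hard rule (the unsafe plan is removed before any optimising happens).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    seconds: float             # hypothetical time needed to get moving
    belt_before_driving: bool  # True if the seat belt is on before driving


PLANS = [
    Plan("belt on, then drive", seconds=8.0, belt_before_driving=True),
    Plan("belt on while driving", seconds=5.0, belt_before_driving=False),
]


def pick_with_penalty(plans, penalty):
    """Soft rule: unsafe plans cost extra, but they can still win."""
    def cost(plan):
        return plan.seconds + (0.0 if plan.belt_before_driving else penalty)
    return min(plans, key=cost)


def pick_with_hard_rule(plans):
    """Hard rule: unsafe plans are filtered out before optimising."""
    allowed = [plan for plan in plans if plan.belt_before_driving]
    return min(allowed, key=lambda plan: plan.seconds)


soft_choice = pick_with_penalty(PLANS, penalty=2.0)
hard_choice = pick_with_hard_rule(PLANS)

print(soft_choice.name)
print(hard_choice.name)
```

In this sketch the penalty is too small to outweigh the time saved, so the soft rule still selects the unsafe plan, while the hard rule cannot. Real systems are far messier, but the distinction between discouraging a shortcut and ruling it out is the same.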

Switching it off

My final red flag didn’t come from research; it came from the great conversations I have with my peers and colleagues. One of the major concerns arising from this advancement is the ability (or lack thereof) to switch it off.

Logically speaking, you should be able to “pull the plug” on anything electronic. However, a self-teaching neural network might be able to teach itself to exist in another space (like a virus) and be reactivated there. Scary stuff…

This article isn’t meant to scare anyone into switching off their devices and getting off the grid. This technology is many years away (maybe not even in our lifetimes). The positive to take from this is that there is so much discussion, and so many theories and hypotheses, constantly de-risking the technology and producing cyber-secure frameworks, so that when this technological advancement launches, we will be ready!!
